Research

Security of machine learning (ML) systems is a relatively new topic in computer security. Over the last decade, many concerns have been highlighted through attacks, followed by defenses, in a cat-and-mouse game. Despite these concerns, learning algorithms are being rapidly developed for applications that involve decisions impacting individuals (e.g., hiring, loan decisions) and societies (e.g., misinformation), as well as for safety-critical environments.

However, fundamental questions in this space remain open, such as how to define security vulnerabilities or how to develop algorithms that test and verify desirable properties with guarantees. The challenge is that, unlike software systems or hand-written rules, ML models are learned through complex stochastic procedures from data drawn from an unknown distribution. We often lack ground truth for what they should be learning, and it is difficult to precisely capture the highly non-linear, high-dimensional internals of the models. On top of all this, adversaries can be adaptive or employ their own ML strategies.

Refutability Tools for Machine Learning

My goal is to develop principled approaches that provide refutability in ML security.

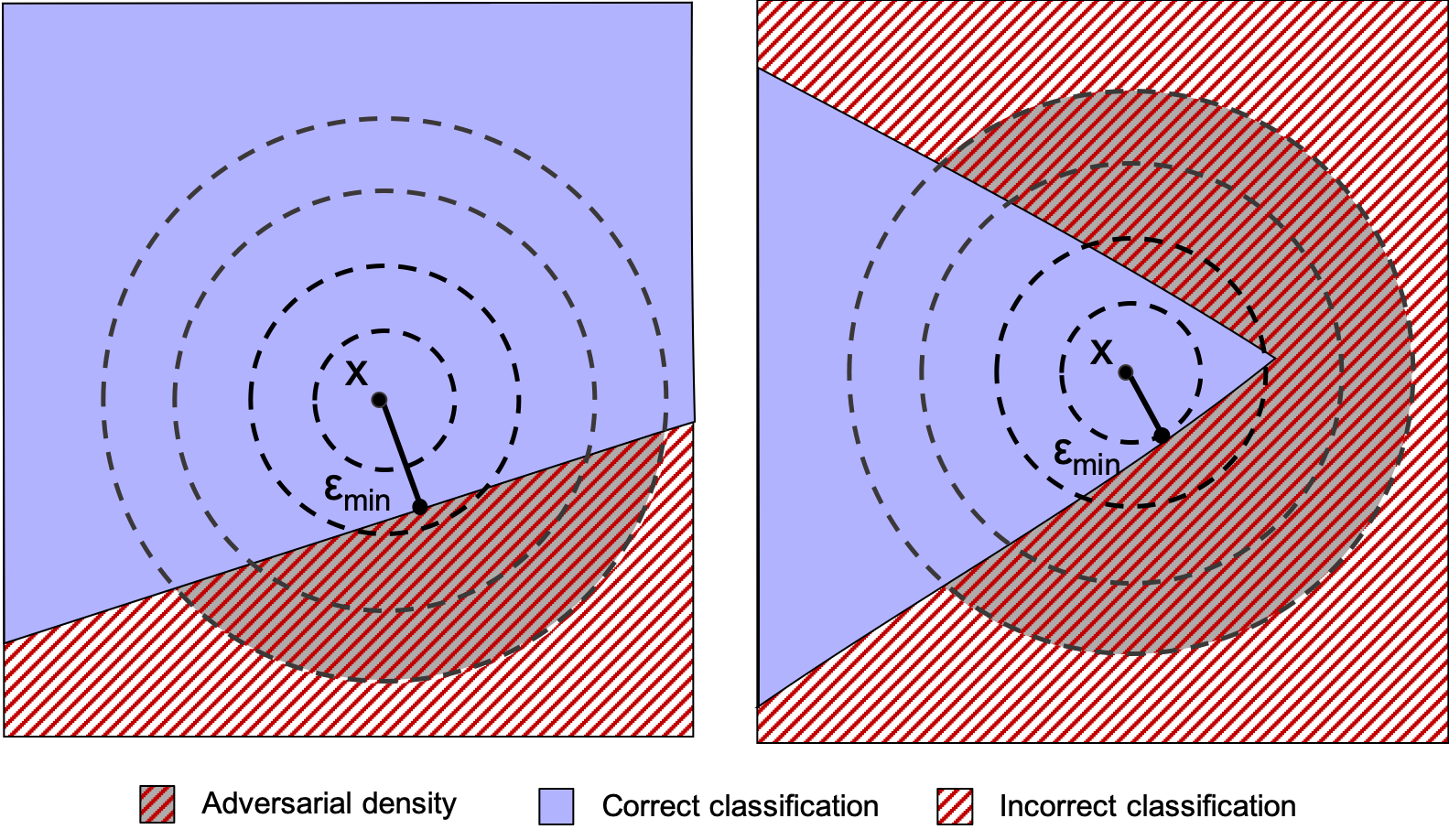

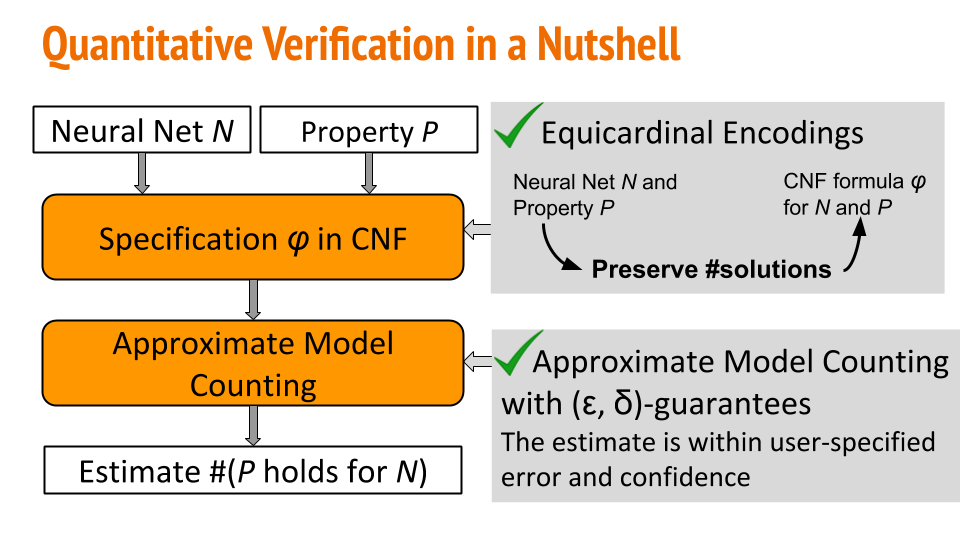

How can we check that a given ML model satisfies a desirable property? My research thus far has focused on building quantitative verification frameworks for properties of neural networks such as robustness, fairness, and susceptibility to data poisoning attacks [CCS 19, ICSE 21]. We also identified different trade-offs in unlearning quality for in- and out-of-distribution data, highlighting the disparate impact of gradient ascent when used for unlearning [Safe GenAI 24].
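
One common way to make a property quantitative is to estimate the probability that it holds and to bound the estimation error. The sketch below is illustrative only and is not the method of [CCS 19] or [ICSE 21]: it assumes a black-box classifier, a uniform L-infinity perturbation model, and hypothetical helper names (`hoeffding_samples`, `estimate_robustness`), and it uses Hoeffding's inequality to choose enough samples that the estimate lies within `eps` of the true probability with confidence at least `1 - delta`.

```python
import math
import random
from typing import Callable, Sequence


def hoeffding_samples(eps: float, delta: float) -> int:
    """Samples needed so that Pr[|estimate - p| >= eps] <= delta (Hoeffding)."""
    return math.ceil(math.log(2.0 / delta) / (2.0 * eps ** 2))


def estimate_robustness(
    model: Callable[[Sequence[float]], int],
    x: Sequence[float],
    radius: float,
    eps: float = 0.05,
    delta: float = 0.01,
    seed: int = 0,
) -> float:
    """Estimate Pr[model(x') == model(x)] for x' drawn uniformly from the
    L-infinity ball of the given radius around x (an assumed perturbation model)."""
    rng = random.Random(seed)
    label = model(x)
    n = hoeffding_samples(eps, delta)
    agree = 0
    for _ in range(n):
        x_perturbed = [xi + rng.uniform(-radius, radius) for xi in x]
        agree += model(x_perturbed) == label
    return agree / n


# Hypothetical toy linear classifier: label 1 iff v[0] + v[1] > 0.
toy_model = lambda v: int(v[0] + v[1] > 0)
# Every point within radius 0.5 of (1, 1) has coordinate sum >= 1, so the label never changes.
score = estimate_robustness(toy_model, [1.0, 1.0], radius=0.5)
```

The guarantee here is statistical (over the sampled perturbations) rather than worst-case, which is the kind of trade-off that quantitative verification accepts in exchange for scalability.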

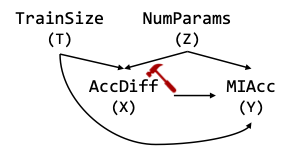

How can we check that "off-the-shelf" ML algorithms have desirable properties? We proposed a systematic, causality-based approach to analyzing the causes of membership inference attacks on models trained via standard training [CCS 22a]. We also showed that executions of stochastic gradient descent (SGD), the most widely used basis of current learning algorithms, can be collision-free under a set of mild, checkable conditions [CCS 23].
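
For readers unfamiliar with membership inference, the sketch below shows a common loss-threshold baseline attack, not the causal analysis of [CCS 22a]. It assumes the attacker observes the model's class probabilities and guesses "member" when the loss on a labeled point is low, since training points tend to be fit more tightly than unseen ones. The function names, threshold, and loss values are hypothetical.

```python
import math
from typing import Sequence


def cross_entropy(probs: Sequence[float], label: int) -> float:
    """Per-example loss of a classifier that outputs class probabilities."""
    return -math.log(max(probs[label], 1e-12))


def is_member_guess(probs: Sequence[float], label: int, threshold: float) -> bool:
    """Guess 'member' when the loss on (x, y) falls below the threshold."""
    return cross_entropy(probs, label) < threshold


def attack_advantage(
    member_losses: Sequence[float],
    nonmember_losses: Sequence[float],
    threshold: float,
) -> float:
    """Membership advantage: true-positive rate minus false-positive rate."""
    tpr = sum(loss < threshold for loss in member_losses) / len(member_losses)
    fpr = sum(loss < threshold for loss in nonmember_losses) / len(nonmember_losses)
    return tpr - fpr


# Hypothetical losses on training points (members) and held-out points (non-members).
members = [0.05, 0.1, 0.2, 0.9]
non_members = [0.3, 0.8, 1.5, 2.0]
advantage = attack_advantage(members, non_members, threshold=0.5)  # 0.75 - 0.25
```

A positive advantage means the attacker distinguishes members from non-members better than chance; asking *why* that gap exists for a given training procedure is the kind of question a causal analysis addresses.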

Ongoing Work. My ongoing research develops algorithms that enhance refutability in ML security, because evaluating attacks and defenses requires more rigor. The next challenge is extending these analyses to dynamic, interactive environments, such as ML models that interact with other models and software systems.

Provably Secure Learning Algorithms

Privacy. I have worked on privacy for graph data in federated settings, designing algorithms for hierarchical clustering [CCS 21] and graph neural networks [CCS 22b] that satisfy the strong notion of local edge differential privacy.
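
A standard building block for local edge differential privacy is randomized response: each user flips every bit of their own adjacency list with a fixed probability before sharing it. The sketch below illustrates that generic mechanism under the assumption that each user holds a 0/1 adjacency vector; it is not the specific algorithm of [CCS 21] or [CCS 22b], and the function names are hypothetical.

```python
import math
import random
from typing import List


def flip_probability(epsilon: float) -> float:
    """Per-bit flip probability p, chosen so that (1 - p) / p = e^epsilon."""
    return 1.0 / (math.exp(epsilon) + 1.0)


def randomize_adjacency(bits: List[int], epsilon: float, rng: random.Random) -> List[int]:
    """Perturb a user's adjacency bits independently. Adding or removing one
    edge changes one bit, so the noisy report satisfies epsilon-edge LDP."""
    p = flip_probability(epsilon)
    return [1 - b if rng.random() < p else b for b in bits]


def estimate_degree(noisy_bits: List[int], epsilon: float) -> float:
    """Unbiased degree estimate: E[sum] = d(1 - 2p) + n*p, solved for d."""
    p = flip_probability(epsilon)
    n = len(noisy_bits)
    return (sum(noisy_bits) - p * n) / (1.0 - 2.0 * p)


# Hypothetical user with 3 neighbors among 8 potential nodes.
rng = random.Random(0)
true_adjacency = [0, 1, 1, 0, 0, 1, 0, 0]
noisy = randomize_adjacency(true_adjacency, epsilon=1.0, rng=rng)
degree_estimate = estimate_degree(noisy, epsilon=1.0)
```

The server never sees the true edges, only noisy bits, which is why downstream tasks such as clustering or message passing must be designed to tolerate this noise.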

Ongoing Work. I am interested in robustness to adversarial corruptions that can affect the outcome of an ML system at different stages of the learning process (training, inference, data retrieval). In particular, I aim to design more robust learning algorithms and more verifiable ML models with provable guarantees for practical applications.

Learnability of Rules

I have worked on using machine learning techniques to infer the data-flow and architectural semantics of instructions for security analyses. Specifically, our approach infers taint rules from minimal knowledge (e.g., input-output examples of instructions), which can then be used to build an architecture-agnostic taint engine [NDSS 19]. Beyond new approaches to inferring inductive rules, I have worked on algorithms that guarantee generalization in programming by example. Specifically, we proposed a dynamic algorithm that computes, on the fly, the number of samples required to guarantee generalization, and we showed how to integrate it into two well-known synthesis approaches [FSE 21].
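
To give a flavor of learning data flow from input-output behavior, the sketch below treats an instruction as a black-box function on two fixed-width operands, flips one input bit at a time, and records which output bits change. This bit-flip probing is a simplified stand-in chosen for illustration, not the inference procedure of [NDSS 19]; the function name and the two-operand instruction model are assumptions.

```python
from typing import Callable, Dict, Iterable, Set, Tuple


def infer_bit_dependencies(
    instr: Callable[[int, int], int],
    width: int,
    samples: Iterable[Tuple[int, int]],
) -> Dict[Tuple[str, int], Set[int]]:
    """Map each input bit ('a' or 'b', index) to the output bits observed to change
    when that input bit is flipped, taking the union over all sample inputs."""
    mask = (1 << width) - 1
    deps: Dict[Tuple[str, int], Set[int]] = {
        (op, i): set() for op in ("a", "b") for i in range(width)
    }
    for a, b in samples:
        base = instr(a, b) & mask
        for i in range(width):
            for op in ("a", "b"):
                a2 = a ^ (1 << i) if op == "a" else a
                b2 = b ^ (1 << i) if op == "b" else b
                diff = (instr(a2, b2) & mask) ^ base
                deps[(op, i)].update(j for j in range(width) if (diff >> j) & 1)
    return deps


# Hypothetical 4-bit ADD observed on a single example: flipping a's bit 0 in 1 + 1
# changes output bits 0 and 1, exposing the carry flow.
add_deps = infer_bit_dependencies(lambda a, b: a + b, 4, [(0b0001, 0b0001)])
xor_deps = infer_bit_dependencies(lambda a, b: a ^ b, 4, [(0b0101, 0b0011)])
```

Because it only records flows that the samples exercise, such a table under-approximates the true dependencies (e.g., AND propagates a bit only when the other operand's bit is 1), which is why choosing and counting examples matters for generalization.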

Ongoing Work. I am interested in combining traditional deductive approaches with learning-based ones to build more trustworthy systems. In this context, trustworthiness means explainable representations and generalizability.

Software Tools

I have released these projects and benchmarks as open source. Here are some project-specific pages and descriptions.